Basic implementation of GAN

Idea

The generator produces fake samples. Its goal is to make these samples as realistic as possible.

The discriminator takes either a real sample or the generator's output as input. Its goal is to classify whether the input is real or fake.

Discriminator

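The network is a binary classifier that predicts whether the input is real or fake. Its final layer outputs a single raw score (a logit). No separate sigmoid or softmax layer is needed because nn.BCEWithLogitsLoss() applies the sigmoid internally. The total loss is (loss(D(real), 1) + loss(D(fake), 0)) / 2, where D is the discriminator. In other words, it is the average of the loss on real images (target 1) and the loss on fake images (target 0).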

It is important not to update the generator's parameters while optimizing the discriminator. In the snippet, this is done by detaching the "fake" images from the computation graph (fake.detach()), so no gradients flow back into the generator. The loss is nn.BCEWithLogitsLoss().

Snippet of discriminator’s loss calculation:

where,

criterion = BCE loss

0 – Fake image

1 – Real image
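The plain-Python sketch below reproduces the arithmetic that nn.BCEWithLogitsLoss() (default mean reduction) performs for the discriminator loss. It is framework-free and does not come from the original notes. Real logits are compared against label 1 and fake logits against label 0, and the two losses are averaged. The helper names `bce_with_logits` and `discriminator_loss` and the logit values are hypothetical. In PyTorch, the fake logits would come from running the discriminator on `fake.detach()`.

```python
import math

def bce_with_logits(logits, target):
    """Hypothetical helper: mean binary cross-entropy on raw logits, using the
    numerically stable form max(x, 0) - x*y + log(1 + exp(-|x|))."""
    losses = [max(x, 0.0) - x * target + math.log1p(math.exp(-abs(x)))
              for x in logits]
    return sum(losses) / len(losses)

def discriminator_loss(real_logits, fake_logits):
    """Hypothetical helper: average of the real-image and fake-image losses."""
    real_loss = bce_with_logits(real_logits, 1.0)  # real images -> label 1
    fake_loss = bce_with_logits(fake_logits, 0.0)  # fake images -> label 0
    return (real_loss + fake_loss) / 2

# Made-up discriminator logits for 3 real and 3 fake images.
real_logits = [2.0, 1.5, 3.0]
fake_logits = [-2.0, -1.0, -2.5]
print(discriminator_loss(real_logits, fake_logits))
```

A discriminator that scores real images high and fake images low gets a small loss. Swapping the two batches gives a much larger one.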

Generator

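The network takes in a noise vector and outputs a vector with the same dimension as a (flattened) image. The loss is nn.BCEWithLogitsLoss(). It is typically computed between the discriminator's predictions on the generated images and a target of 1 (real), because the generator wants its fakes to be classified as real.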

Snippet of generator’s loss calculation:

where, criterion = BCE loss
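Here is a hedged, plain-Python sketch of the generator's loss. It assumes the usual convention that the discriminator's logits on generated images are compared against label 1. The helper names and logit values are hypothetical. In PyTorch these logits are computed without `.detach()`, so gradients can flow through the discriminator into the generator, and only the generator's optimizer takes a step.

```python
import math

def bce_with_logits(logits, target):
    """Hypothetical helper: mean BCE on raw logits (same arithmetic as
    nn.BCEWithLogitsLoss with mean reduction)."""
    losses = [max(x, 0.0) - x * target + math.log1p(math.exp(-abs(x)))
              for x in logits]
    return sum(losses) / len(losses)

def generator_loss(fake_logits):
    # Hypothetical helper: the generator wants its fakes labeled "real" (1).
    return bce_with_logits(fake_logits, 1.0)

confidently_fake = [-3.0, -2.5, -4.0]  # made-up logits: discriminator not fooled
fooled = [2.0, 1.0, 3.0]               # made-up logits: discriminator fooled
print(generator_loss(confidently_fake), generator_loss(fooled))
```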

The problem with BCE loss in GANs

The generator tries to maximize the discriminator's BCE loss on fake images, i.e. it creates fake images that look realistic. Meanwhile, the discriminator tries to minimize the BCE loss. The discriminator usually learns faster and soon starts confidently predicting every generated image as fake. In that regime the sigmoid saturates and the loss is almost flat, so it provides little or no signal/gradient to the generator. This is the vanishing gradient problem, which is especially harmful in deeper networks.
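The saturation can be seen numerically. This sketch assumes the original minimax form, in which the generator's term is log(1 - D(G(z))) with D = sigmoid(logit). The derivative of that term with respect to the logit is -sigmoid(logit), which approaches 0 as the discriminator grows confident that the image is fake (logit far below 0). The function names and logit values are hypothetical.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def minimax_generator_grad(logit):
    """Hypothetical helper: d/d(logit) of log(1 - sigmoid(logit)), the
    generator's term in the minimax objective. Analytically, -sigmoid(logit)."""
    return -sigmoid(logit)

for logit in [2.0, 0.0, -5.0, -10.0]:
    # The more confidently the discriminator says "fake" (very negative
    # logit), the closer this gradient gets to zero.
    print(f"logit={logit:6.1f}  grad={minimax_generator_grad(logit):.2e}")
```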

W loss

W loss (Wasserstein loss) addresses this problem with BCE loss.

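With BCE loss, the discriminator's prediction is a probability between 0 and 1 (the sigmoid of its output). Once the discriminator becomes confident, this sigmoid saturates and causes the problem described in the previous section. The idea of W loss is to change the discriminator's role to that of a critic. The critic's output is not squashed into the range 0 to 1; it can be any real number. Instead of bounding the output, the critic is constrained to be 1-Lipschitz, either by weight clipping (the original WGAN) or by a gradient penalty (WGAN-GP). Because the output does not saturate, the gradient does not shrink to 0, which prevents the vanishing gradient problem.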
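Weight clipping, the constraint used in the original WGAN, can be sketched as clamping every critic weight to a small interval [-c, c] after each critic update. This is a minimal sketch under assumptions. The flat list of weights stands in for the critic's parameters (hypothetical), and c = 0.01 is the clipping value used in the original WGAN paper. In PyTorch the same step is usually a loop over `critic.parameters()` calling `p.data.clamp_(-c, c)`.

```python
def clip_weights(weights, c=0.01):
    """Hypothetical helper: clamp every critic weight to [-c, c]
    (WGAN weight clipping). `weights` stands in for the critic's parameters."""
    return [min(max(w, -c), c) for w in weights]

critic_weights = [0.5, -0.003, -0.2, 0.007]  # made-up values
print(clip_weights(critic_weights))  # → [0.01, -0.003, -0.01, 0.007]
```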

The critic is modeled such that higher output values indicate real images and lower output values indicate fake images.

The objective of loss calculation is as follows:

The generator tries to maximize the critic's output on generated (fake) images, so its loss is the negative of the critic's mean prediction on fakes. (https://stackoverflow.com/a/69558035 – Explanation of why the negative of the critic's prediction is used as the generator loss)
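A minimal plain-Python sketch of this generator loss. It assumes, as in the usual WGAN convention, that the critic gives higher scores to real images. The function name and scores are hypothetical.

```python
def generator_w_loss(critic_fake_scores):
    """Hypothetical helper: negative mean critic score on fake images.
    Minimizing it pushes the critic's scores on fakes upward."""
    return -sum(critic_fake_scores) / len(critic_fake_scores)

# Made-up critic outputs on three generated images.
scores = [-1.5, 0.5, -2.0]
print(generator_w_loss(scores))  # → 1.0
```

The derivative of this loss with respect to each score is -1/n, no matter how large or small the score is. This is the non-saturating behavior the W loss relies on.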

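The critic tries to maximize the difference between its predictions on real images and on fake images, so its loss is mean(critic(fake)) - mean(critic(real)). A gradient penalty, computed on random interpolations between real and fake images, is added to this loss to push the norm of the critic's gradient toward 1.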
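A hedged sketch of the critic loss with a WGAN-GP style gradient penalty, written in plain Python. To avoid needing autograd, it uses a hypothetical toy critic C(x) = sum_i w_i * tanh(x_i), whose input gradient has a closed form. The penalty is lambda * mean((||grad C(x_hat)||_2 - 1)^2), where x_hat = eps * real + (1 - eps) * fake and eps is uniform in [0, 1]. lambda = 10 is the value commonly used from the WGAN-GP paper. In PyTorch the gradient would instead come from `torch.autograd.grad(..., create_graph=True)`.

```python
import math
import random

# Hypothetical toy critic: C(x) = sum_i w_i * tanh(x_i).
W = [0.8, -1.2, 0.5]

def critic(x):
    return sum(w * math.tanh(xi) for w, xi in zip(W, x))

def critic_input_grad(x):
    # Closed-form input gradient: dC/dx_i = w_i * (1 - tanh(x_i)^2)
    return [w * (1.0 - math.tanh(xi) ** 2) for w, xi in zip(W, x)]

def gradient_penalty(real, fake, rng):
    """Mean of (||grad C(x_hat)||_2 - 1)^2, with x_hat a random
    interpolation between each real sample and its paired fake sample."""
    total = 0.0
    for r, f in zip(real, fake):
        eps = rng.random()
        x_hat = [eps * ri + (1.0 - eps) * fi for ri, fi in zip(r, f)]
        grad_norm = math.sqrt(sum(g * g for g in critic_input_grad(x_hat)))
        total += (grad_norm - 1.0) ** 2
    return total / len(real)

def critic_loss(real, fake, lam=10.0, rng=random):
    mean_real = sum(critic(x) for x in real) / len(real)
    mean_fake = sum(critic(x) for x in fake) / len(fake)
    # Minimizing (fake - real) maximizes the real-minus-fake gap.
    return mean_fake - mean_real + lam * gradient_penalty(real, fake, rng)

# Made-up batches of 3-dimensional "images".
real_batch = [[1.0, -0.5, 0.3], [0.8, -1.0, 0.1]]
fake_batch = [[-0.2, 0.4, 0.0], [0.1, 0.9, -0.3]]
print(critic_loss(real_batch, fake_batch, rng=random.Random(0)))
```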

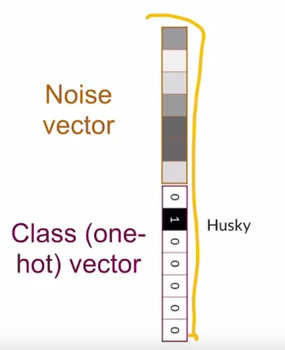

Conditional GAN

A conditional GAN generates an output image that belongs to a chosen category. For example, the user can choose whether to generate a dog or a cat by changing the class information supplied with the input noise vector.

This is achieved by appending a one-hot encoded class vector to the noise vector. The combined vector is the input to the generator.
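A minimal plain-Python sketch of building the conditional generator's input. The noise values, the 2-class setup (for example 0 = cat, 1 = dog), and the helper names are hypothetical. In PyTorch the equivalent is usually `torch.cat([noise, F.one_hot(labels, n_classes).float()], dim=1)`.

```python
def one_hot(label, n_classes):
    # Hypothetical helper: one-hot class vector.
    vec = [0.0] * n_classes
    vec[label] = 1.0
    return vec

def generator_input(noise, label, n_classes):
    # Hypothetical helper: append the one-hot class vector to the noise vector.
    return noise + one_hot(label, n_classes)

z = [0.12, -0.85, 0.33, 1.4]  # made-up 4-dimensional noise vector
print(generator_input(z, label=1, n_classes=2))
# → [0.12, -0.85, 0.33, 1.4, 0.0, 1.0]
```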

The discriminator also uses the class information to determine whether an image is real or fake. Its input is the image (real or generated) with the one-hot class vector appended. Each element of the one-hot vector is expanded into an array the size of the input image, filled with that element's value (1 or 0). These arrays act as extra channels alongside the image channels.
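A plain-Python sketch of the discriminator input, using nested lists as channels x height x width. The image size, class count, and helper names are hypothetical. In PyTorch this is typically done by reshaping the one-hot tensor to (batch, n_classes, 1, 1), repeating it to the image's height and width, and concatenating along the channel dimension.

```python
def expand_one_hot(label, n_classes, height, width):
    """Hypothetical helper: turn each one-hot element into an H x W plane,
    all ones for the target class and all zeros for every other class."""
    return [[[1.0 if c == label else 0.0 for _ in range(width)]
             for _ in range(height)]
            for c in range(n_classes)]

def discriminator_input(image, label, n_classes):
    # `image` is a list of channels, each an H x W list of rows.
    height, width = len(image[0]), len(image[0][0])
    return image + expand_one_hot(label, n_classes, height, width)

img = [[[0.1, 0.2], [0.3, 0.4]]]  # made-up 1-channel 2x2 image
x = discriminator_input(img, label=2, n_classes=3)
print(len(x))  # → 4
print(x[3])    # → [[1.0, 1.0], [1.0, 1.0]]
```

The result has one image channel followed by three class channels, and only the channel for class 2 is filled with ones.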

The BCE loss is calculated and optimized in the same way as explained in the first section.

Controllable GAN

The noise vector is also called the z vector. Its components z1, z2, z3, etc. span the latent space. We can change a specific attribute of the generated image by modifying the corresponding part of z. For example, changing z1 might change the hair color of a generated face.

How can we achieve this?

Supervised

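A pre-trained classifier can be used to detect an attribute of the generated face, such as hair color. Using the gradients of the classifier's output with respect to the noise vector, we can update the noise vector (gradient ascent on z) so that the generated face moves toward the desired attribute. The generator's weights stay fixed, so this does not affect the optimization of the generator and discriminator.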
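A toy, plain-Python sketch of this gradient-based update of z. It makes strong simplifying assumptions: a frozen linear "generator" x = A z and a linear attribute classifier score(x) = v . x. Because both are linear, the gradient of the score with respect to z is simply A^T v. All names and numbers are hypothetical. With a real PyTorch GAN, one would instead call `requires_grad_(True)` on z, compute the classifier score on the generator's output, call `backward()`, and step only z.

```python
# Hypothetical frozen linear generator (3-dim noise -> 2-dim "image")
# and a hypothetical linear attribute classifier (e.g. "blond hair").
A = [[0.5, -1.0, 0.2],
     [1.5, 0.3, -0.4]]
v = [1.0, 2.0]

def generate(z):
    return [sum(a * zi for a, zi in zip(row, z)) for row in A]

def attribute_score(z):
    return sum(vi * xi for vi, xi in zip(v, generate(z)))

def score_grad_wrt_z(z):
    # d/dz (v . A z) = A^T v; constant here because both maps are linear.
    return [sum(A[r][c] * v[r] for r in range(len(A))) for c in range(len(z))]

def nudge_noise(z, steps=5, lr=0.1):
    """Gradient ascent on the noise vector only; A and v are never changed."""
    for _ in range(steps):
        g = score_grad_wrt_z(z)
        z = [zi + lr * gi for zi, gi in zip(z, g)]
    return z

z0 = [0.2, -0.1, 0.4]
z1 = nudge_noise(z0)
print(attribute_score(z0), attribute_score(z1))
```

The attribute score rises after the update, while the "generator" A and the classifier v are never modified.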

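An advantage is that this approach is simple to integrate with an existing GAN. However, the classifier must give reliable, consistent outputs for the approach to work well. The method is also supervised, because training the classifier requires labeled data.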

Unsupervised

The problems with controllable GAN